I wonder how TuringBot applies cross-validation:

My understanding of cross-validation is that the "training data" is used to optimize models, after which the remaining "test data" is used to evaluate, rank, and select them. This split reduces the risk of overfitting. In TuringBot, there is a tick box in the top right-hand corner that toggles the displayed error metrics between the training data and the test data. All good so far...

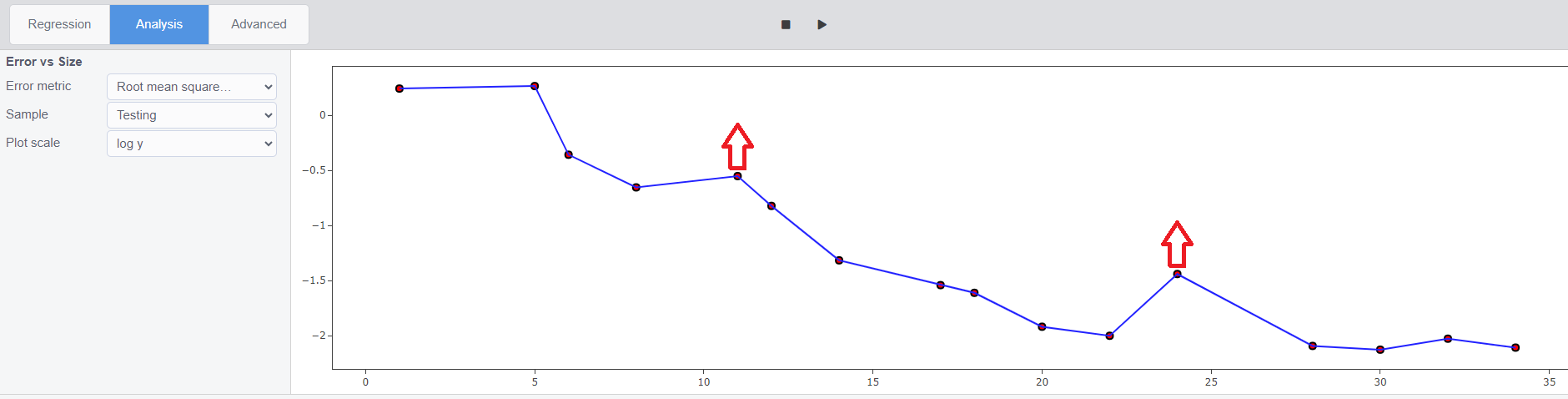
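The two-way split described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not TuringBot's internals: the rows are shuffled, 20% are held out, and the same RMSE is reported on both subsets, much like the training/test toggle. The data set, the `train_test_split` helper and the `candidate` equation are all hypothetical.

```python
import math
import random


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle rows and return (train, test), with test_fraction of rows held out."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def rmse(model, rows):
    """Root-mean-square error of model(x) against y over (x, y) rows."""
    return math.sqrt(sum((model(x) - y) ** 2 for x, y in rows) / len(rows))


# Hypothetical data: y = 2x + 1 plus Gaussian noise.
rng = random.Random(42)
data = [(x, 2 * x + 1 + rng.gauss(0, 0.5)) for x in range(100)]
train, test = train_test_split(data, test_fraction=0.2)  # 80/20 split


def candidate(x):
    """One hypothetical candidate equation found on the training data."""
    return 2 * x + 1


print(f"training RMSE: {rmse(candidate, train):.3f}")
print(f"test RMSE:     {rmse(candidate, test):.3f}")
```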

Question: How is cross-validation actually implemented in TuringBot? Does TuringBot choose which equations to keep for the next iteration/generation from the Pareto front (size vs. error metric) of the "training" data only, or of all the data combined ("training" + "testing")? I ask because TuringBot clearly does not use the "testing" Pareto front to select equations for the next iteration/generation. The screen dump shows peaks for the candidate equations of size 11 and 24: under "testing" they have a worse error metric than smaller equations. Note that in this case I use an 80/20 split and the same RMSE error metric for both Regression and Analysis.
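To make the question concrete, here is a sketch of a size-vs-error Pareto front computed separately from training errors and from test errors. The error values are invented to mimic the pattern described (sizes 11 and 24 doing worse on test than smaller equations); they are not read from the screenshot, and the `pareto_front` helper is a hypothetical illustration, not TuringBot's selection code.

```python
import math


def pareto_front(points):
    """Return the (size, error) points not dominated by a smaller or equal-size,
    lower-error point, in order of increasing size."""
    front, best_error = [], math.inf
    for size, error in sorted(points):
        if error < best_error:
            front.append((size, error))
            best_error = error
    return front


# Invented errors: size -> (training RMSE, test RMSE).
candidates = {
    3: (1.20, 1.25),
    7: (0.80, 0.85),
    11: (0.60, 0.95),  # better on training, worse on test than size 7
    15: (0.55, 0.70),
    24: (0.40, 1.10),  # same pattern: a likely sign of overfitting
}

train_front = pareto_front([(s, tr) for s, (tr, _) in candidates.items()])
test_front = pareto_front([(s, te) for s, (_, te) in candidates.items()])
print("training front sizes:", [s for s, _ in train_front])
print("test front sizes:    ", [s for s, _ in test_front])
```

With these numbers the training front keeps sizes 3, 7, 11, 15 and 24, while the test front keeps only 3, 7 and 15. If selection were driven by the training front alone, sizes 11 and 24 would survive into the next generation even though the test data argues against them.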

Further thoughts: I understand that k-fold is the industry standard for cross-validation, but it is computationally demanding in symbolic regression. A compromise could be a three-way split ("training" + "validation" + "testing"): use the "training" data only to optimize the scalar parameters of each equation, then automatically select equations for the next iteration/generation from the Pareto front of the error metric on either the combined "training" + "validation" data or the "validation" data alone. In this approach, the "testing" data is holdout data: it is never submitted to the symbolic regression algorithm, but is reserved for the user's final evaluation after the regression has been stopped (e.g. separately in a spreadsheet), to subjectively select the best parsimonious equation. How does TuringBot's method of cross-validation fit into this approach; is its "testing" data actually used for "validation"?
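The proposed three-way scheme can be sketched as follows, assuming a 60/20/20 split and, for simplicity, candidate structures with a single scalar parameter `a` in `y ≈ a * basis(x)` fitted by closed-form least squares. The data, the structures and their sizes are hypothetical; the point is only the data flow: parameters come from training rows, the Pareto front is built from validation error, and test rows are touched once, at the end.

```python
import math
import random


def three_way_split(rows, fractions=(0.6, 0.2), seed=0):
    """Shuffle rows; return (train, validation, test). Test gets the remainder."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * fractions[0])
    n_val = round(len(shuffled) * fractions[1])
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def fit_scale(basis, rows):
    """Least-squares estimate of the single parameter a in y ≈ a * basis(x)."""
    num = sum(basis(x) * y for x, y in rows)
    den = sum(basis(x) ** 2 for x, _ in rows)
    return num / den


def rmse(model, rows):
    """Root-mean-square error of model(x) against y over (x, y) rows."""
    return math.sqrt(sum((model(x) - y) ** 2 for x, y in rows) / len(rows))


def pareto_front(points):
    """Keep (size, error, ...) tuples not dominated by a smaller, more accurate one."""
    front, best_error = [], math.inf
    for point in sorted(points, key=lambda p: (p[0], p[1])):
        if point[1] < best_error:
            front.append(point)
            best_error = point[1]
    return front


# Hypothetical data: y = 0.5 * x^2 plus Gaussian noise.
rng = random.Random(1)
data = [(i / 10, 0.5 * (i / 10) ** 2 + rng.gauss(0, 0.1)) for i in range(1, 101)]
train, val, test = three_way_split(data)

# Hypothetical candidate structures: name -> (size, basis function).
structures = {
    "a*x": (3, lambda x: x),
    "a*x^2": (5, lambda x: x ** 2),
    "a*x^3": (5, lambda x: x ** 3),
}

candidates = []
for name, (size, basis) in structures.items():
    a = fit_scale(basis, train)  # scalar parameter from training data only
    model = lambda x, a=a, basis=basis: a * basis(x)
    candidates.append((size, rmse(model, val), name, model))  # scored on validation

# Selection sees validation error only; test rows are used once, at the end.
front = pareto_front(candidates)
for size, val_err, name, model in front:
    print(f"{name:6} size={size} val RMSE={val_err:.3f} test RMSE={rmse(model, test):.3f}")
```

Scoring on the combined training + validation data instead, the other option mentioned above, would only change which rows are passed to `rmse` when building `candidates`.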

Ref.: Wikipedia, "Training, validation, and test data sets"