- Neural networks require large datasets and produce black-box predictions

- Symbolic regression outputs interpretable formulas—deployable without TensorFlow/PyTorch

- TuringBot ships as a native desktop app—no Python environment needed

- Can match or outperform neural networks on tabular data when few samples are available

When to Choose Alternatives Over Neural Networks

| Situation | Neural Network | Alternative |
|---|---|---|
| Small dataset (<10k rows) | Prone to overfitting | Symbolic regression |
| Explainability required | Black box | Symbolic regression (formula) |
| Embedded deployment | MB of weights | Single equation (bytes) |
| Tabular data | Overkill | Random forest, XGBoost, or symbolic |

Alternatives Comparison

- Random Forests / XGBoost: Ensemble methods; good accuracy but black-box

- SVM: Effective for classification; limited interpretability

- k-NN: Simple but slow for large datasets; no model compression

- Symbolic Regression (TuringBot): Explicit formulas; fully interpretable; deployable anywhere
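To make the "deployable anywhere" point concrete, here is a minimal sketch of what shipping a symbolic regression model can look like. The formula, its coefficients, and the names `predict`, `x1`, and `x2` are invented for illustration; they are not the output of any actual TuringBot run.

```python
import math


def predict(x1: float, x2: float) -> float:
    """Hypothetical formula of the kind a symbolic regression run might return.

    The whole "model" is this one expression: no weights file and no
    machine learning framework, only the standard math module.
    """
    return 2.5 * x1 + math.sin(x2) - 0.3 * x1 * x2


# With x2 = 0 the sine and interaction terms vanish, leaving 2.5 * x1.
print(predict(1.0, 0.0))
```

Because the model is a single expression, it can be ported by hand to C, a spreadsheet cell, or a microcontroller, and each term can be read directly to see how an input affects the prediction.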

A noteworthy alternative

Among the alternatives above, all but symbolic regression rely on implicit computations that cannot be easily interpreted. With symbolic regression, the model is an explicit mathematical formula that can be written on a sheet of paper, which makes this technique a particularly interesting alternative to neural networks.

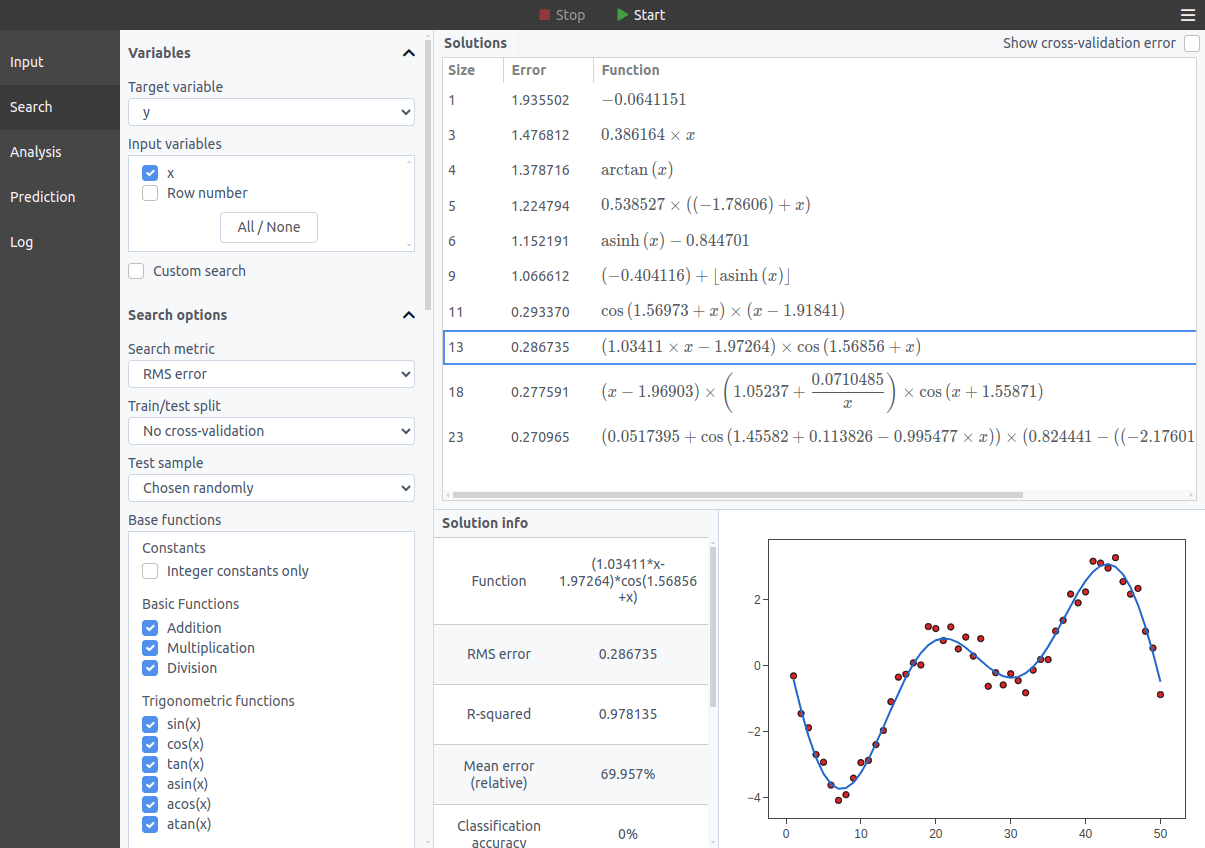

Here is how it works: given a set of base functions, for instance sin(x), exp(x), addition, and multiplication, a training algorithm searches for the combinations of those functions that best predict the output variable from the input variables. Simpler formulas are preferred, so the algorithm automatically discards a formula if it finds a simpler one that performs just as well.
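The idea can be sketched in a few lines of Python. This is a toy, brute-force illustration under stated assumptions, not the algorithm TuringBot uses: it enumerates every small expression tree instead of searching cleverly, it handles a single input variable, and all names (`UNARY`, `BINARY`, `TERMINALS`, `search`, and so on) are hypothetical. Complexity is taken to be the number of nodes in the expression.

```python
import itertools
import math

# Base functions and terminals (illustrative choices, not a real tool's defaults).
UNARY = {"sin": math.sin, "exp": math.exp}
BINARY = {"+": lambda a, b: a + b, "*": lambda a, b: a * b}
TERMINALS = ["x", 1.0, 2.0]


def expressions(depth):
    """Yield every expression tree up to `depth`, encoded as nested tuples."""
    if depth == 0:
        yield from TERMINALS
        return
    smaller = list(expressions(depth - 1))
    yield from smaller
    for name in UNARY:
        for arg in smaller:
            yield (name, arg)
    for name in BINARY:
        for left, right in itertools.product(smaller, repeat=2):
            yield (name, left, right)


def evaluate(expr, x):
    """Compute the value of an expression tree at input x."""
    if expr == "x":
        return x
    if isinstance(expr, float):
        return expr
    if len(expr) == 2:
        return UNARY[expr[0]](evaluate(expr[1], x))
    return BINARY[expr[0]](evaluate(expr[1], x), evaluate(expr[2], x))


def size(expr):
    """Complexity measure: number of nodes in the tree."""
    if not isinstance(expr, tuple):
        return 1
    return 1 + sum(size(child) for child in expr[1:])


def show(expr):
    """Render an expression tree as a readable formula."""
    if not isinstance(expr, tuple):
        return str(expr)
    if len(expr) == 2:
        return f"{expr[0]}({show(expr[1])})"
    return f"({show(expr[1])} {expr[0]} {show(expr[2])})"


def search(xs, ys, depth=2):
    """Return (size, error, expr) triples; each entry beats all simpler ones."""
    best_by_size = {}
    for expr in expressions(depth):
        try:
            err = sum((evaluate(expr, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
        except OverflowError:
            continue  # skip formulas that blow up numerically
        n = size(expr)
        if n not in best_by_size or err < best_by_size[n][0]:
            best_by_size[n] = (err, expr)
    front, best_err = [], math.inf
    for n in sorted(best_by_size):
        err, expr = best_by_size[n]
        if err < best_err:  # a more complex formula is kept only if it is more accurate
            front.append((n, err, expr))
            best_err = err
    return front


# Synthetic data generated from a known formula, y = x*x + sin(x).
XS = [i * 0.5 for i in range(7)]
YS = [x * x + math.sin(x) for x in XS]

for n, err, expr in search(XS, YS):
    print(f"size {n}: error {err:.4g}  {show(expr)}")
```

The printed list is a small version of the trade-off described above: each line is the most accurate formula found at its size, and a larger formula appears only if it reduces the error. Practical tools replace the exhaustive enumeration with a guided search and also fit numeric constants, which this sketch does not attempt.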

Here is an example of output from a symbolic regression optimization, in which a set of formulas of increasing complexity was found to describe the input dataset:

This method very much resembles a scientist looking for mathematical laws that explain data, as Kepler did with data on the positions of planets in the sky to find his laws of planetary motion.
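The Kepler analogy can be made concrete with a small worked example. The sketch below is an ordinary least-squares fit of a power law on log-transformed data, not symbolic regression itself: the form T = c · a^k is assumed up front, whereas a symbolic regression tool would have to discover it. The planetary values are approximate, rounded figures, and the function name `fit_power_law` is hypothetical.

```python
import math

# Approximate semi-major axis (AU) and orbital period (years) for five planets.
PLANETS = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
}


def fit_power_law(points):
    """Least-squares fit of log(T) = log(c) + k * log(a); returns (c, k)."""
    logs = [(math.log(a), math.log(t)) for a, t in points]
    n = len(logs)
    mean_x = sum(x for x, _ in logs) / n
    mean_y = sum(y for _, y in logs) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in logs)
    variance = sum((x - mean_x) ** 2 for x, _ in logs)
    k = covariance / variance
    c = math.exp(mean_y - k * mean_x)
    return c, k


c, k = fit_power_law(list(PLANETS.values()))
print(f"T = {c:.3f} * a^{k:.3f}")
```

The fitted exponent comes out close to 3/2 and the constant close to 1, which is Kepler's third law in these units: the square of the period is proportional to the cube of the semi-major axis.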

Conclusion

In this article, we have seen some alternatives to neural networks based on completely different ideas, including symbolic regression, which generates models that are explicit and more explainable than a neural network. Exploring different models is valuable because they may perform differently in different contexts.