Regression models are perhaps the most important class of machine learning models. In this tutorial, we will show how to easily generate a regression model from data.

What regression is

The goal of a regression model is to predict a target variable from one or more input variables. In the simplest case, the relationship between the variables is linear, and basic methods such as least squares regression can be used.
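As a minimal sketch of the linear case, the Python function below fits a straight line y ≈ a + b*x to a single input variable by ordinary least squares. The name `fit_least_squares` is hypothetical (it is not part of TuringBot or any library). With several input variables, the same idea amounts to solving a linear least squares system, for example with NumPy's `numpy.linalg.lstsq`.

```python
def fit_least_squares(xs, ys):
    """Fit y ≈ a + b*x by ordinary least squares (one input variable)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope b = cov(x, y) / var(x); the intercept makes the line pass through the means.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("input variable has zero variance")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b
```

For example, `fit_least_squares([1, 2, 3, 4], [3, 5, 7, 9])` recovers the line y = 1 + 2x exactly.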

In real-world datasets, the relationship between the variables is often highly non-linear. This motivates the use of more sophisticated machine learning techniques for regression, such as neural networks and random forests.

An example of a regression problem is predicting the value of a house from its characteristics (location, number of bedrooms, total area, etc.), using information from other houses that are not identical to it but whose prices are known.

Regression model example

To give a concrete example, let’s consider the following public dataset of house prices: x26.txt. This file contains a long, uncommented header; a stripped-down version that is compatible with TuringBot can be found here: house_prices.txt. Its columns are the following:

- Index;
- Local selling prices, in hundreds of dollars;
- Number of bathrooms;
- Area of the site in thousands of square feet;
- Size of the living space in thousands of square feet;
- Number of garages;
- Number of rooms;
- Number of bedrooms;
- Age in years;
- Construction type (1=brick, 2=brick/wood, 3=aluminum/wood, 4=wood);
- Number of fireplaces;
- Selling price.
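Before the data can be handed to any regression tool, the target column has to be separated from the inputs. The sketch below assumes that the stripped-down file holds one header line of column names followed by whitespace-separated numbers. That layout, and the helper names `parse_table` and `split_target`, are assumptions for illustration, not a description of how TuringBot reads files.

```python
def parse_table(text):
    """Parse a header line of column names followed by whitespace-separated numeric rows."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    names = lines[0].split()
    rows = []
    for ln in lines[1:]:
        values = [float(v) for v in ln.split()]
        if len(values) != len(names):
            raise ValueError(f"expected {len(names)} columns, got {len(values)}")
        rows.append(values)
    return names, rows


def split_target(names, rows, target=None, exclude=()):
    """Separate the target column (default: the last one) from the input columns.

    Columns whose names appear in `exclude` (e.g. an index column) are dropped
    from the inputs.
    """
    t = len(names) - 1 if target is None else names.index(target)
    keep = [i for i, n in enumerate(names) if i != t and n not in exclude]
    input_names = [names[i] for i in keep]
    X = [[r[i] for i in keep] for r in rows]
    y = [r[t] for r in rows]
    return input_names, X, y
```

For instance, `split_target(names, rows, exclude=("index",))` would use the last column, the selling price, as the target and drop a column named `index` (a hypothetical header name) from the inputs.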

The goal is to predict the last column, the selling price, as a function of all the other variables. To do that, we are going to use a technique called symbolic regression, which attempts to find explicit mathematical formulas that connect the input variables to the target variable.
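To illustrate what searching for explicit formulas means, here is a deliberately tiny sketch. With a single input x, it tries the candidate structures y ≈ a + b*f(x) for a few hypothetical choices of f, fits the constants a and b by least squares, and keeps the formula with the lowest mean squared error. This is only a toy under those assumptions, not TuringBot's algorithm; a real symbolic regression search explores far richer expressions that combine many variables and operators.

```python
import math

# Hypothetical toy search space: y ≈ a + b * f(x) for these unary functions f.
UNARY = {
    "x": lambda x: x,
    "x^2": lambda x: x * x,
    "sqrt(x)": math.sqrt,
    "log(x)": math.log,
    "1/x": lambda x: 1.0 / x,
}


def _fit_line(us, ys):
    """Least squares fit of y ≈ a + b*u; returns None if u has no variance."""
    n = len(us)
    mu, my = sum(us) / n, sum(ys) / n
    suu = sum((u - mu) ** 2 for u in us)
    if suu == 0:
        return None
    b = sum((u - mu) * (y - my) for u, y in zip(us, ys)) / suu
    return my - b * mu, b


def toy_symbolic_regression(xs, ys):
    """Try each candidate structure, fit its constants, keep the lowest-error formula."""
    best = None
    for name, f in UNARY.items():
        try:
            us = [f(x) for x in xs]
        except (ValueError, ZeroDivisionError, OverflowError):
            continue  # f is undefined somewhere on the data (e.g. log of 0)
        fit = _fit_line(us, ys)
        if fit is None:
            continue
        a, b = fit
        mse = sum((a + b * u - y) ** 2 for u, y in zip(us, ys)) / len(ys)
        if best is None or mse < best[0]:
            best = (mse, f"{a:.4g} + {b:.4g} * {name}")
    return best  # (mean squared error, formula as text), or None
```

On data generated from y = 3 + 2x², this search returns the `x^2` candidate with essentially zero error.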

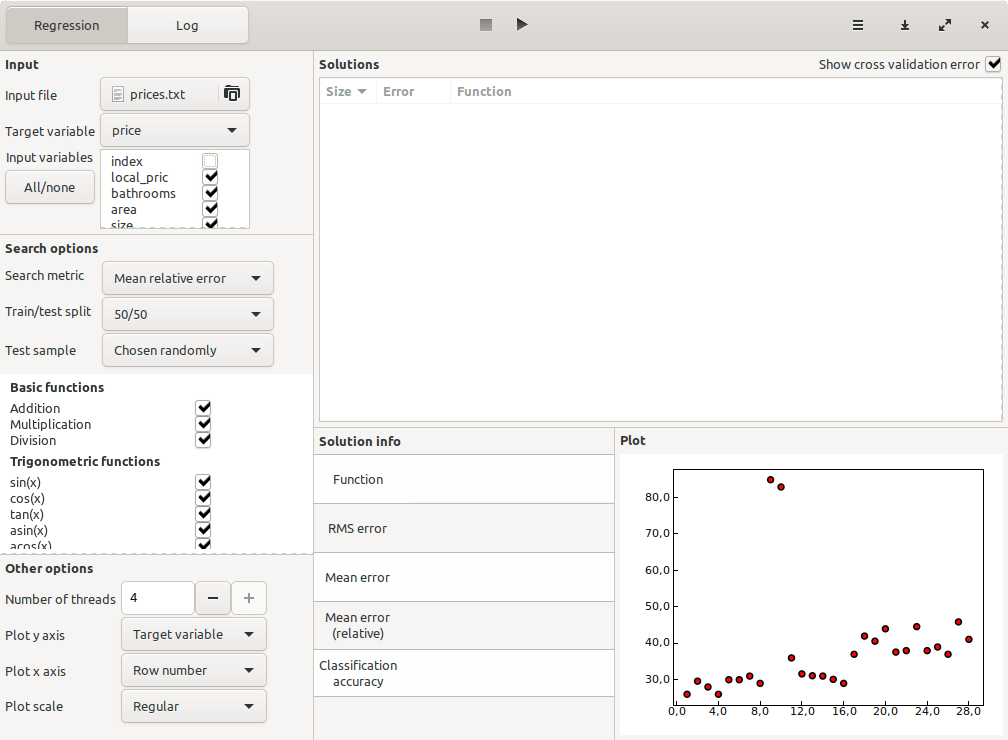

We will use the desktop software TuringBot, which can be downloaded for free, to find that regression model. The usage is quite straightforward: you load the input file through the interface, select which variable is the target and which variables should be used as input, and then start the search. This is what its interface looks like with the data loaded in:

We have also enabled the cross-validation feature with a 50/50 test/train split (see the “Search options” menu in the image above). This will allow us to easily discard overfit formulas.
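The idea behind a 50/50 split can be sketched in a few lines of Python: shuffle the rows, use one half to fit candidate formulas, and measure their error only on the other half. The function name, the fixed `seed`, and the rounding used for odd-sized datasets are assumptions for this sketch; TuringBot performs its own split internally.

```python
import random


def train_test_split(X, y, test_fraction=0.5, seed=0):
    """Shuffle row indices and split (X, y) into training and test sets."""
    if len(X) != len(y):
        raise ValueError("X and y must have the same number of rows")
    indices = list(range(len(y)))
    random.Random(seed).shuffle(indices)  # fixed seed -> reproducible split
    n_test = round(len(indices) * test_fraction)
    test_ids, train_ids = indices[:n_test], indices[n_test:]

    def take(ids):
        return [X[i] for i in ids], [y[i] for i in ids]

    return take(train_ids), take(test_ids)
```

A formula whose error on the test half is much larger than on the training half is likely overfit, which is what the cross-validation setting lets us detect.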

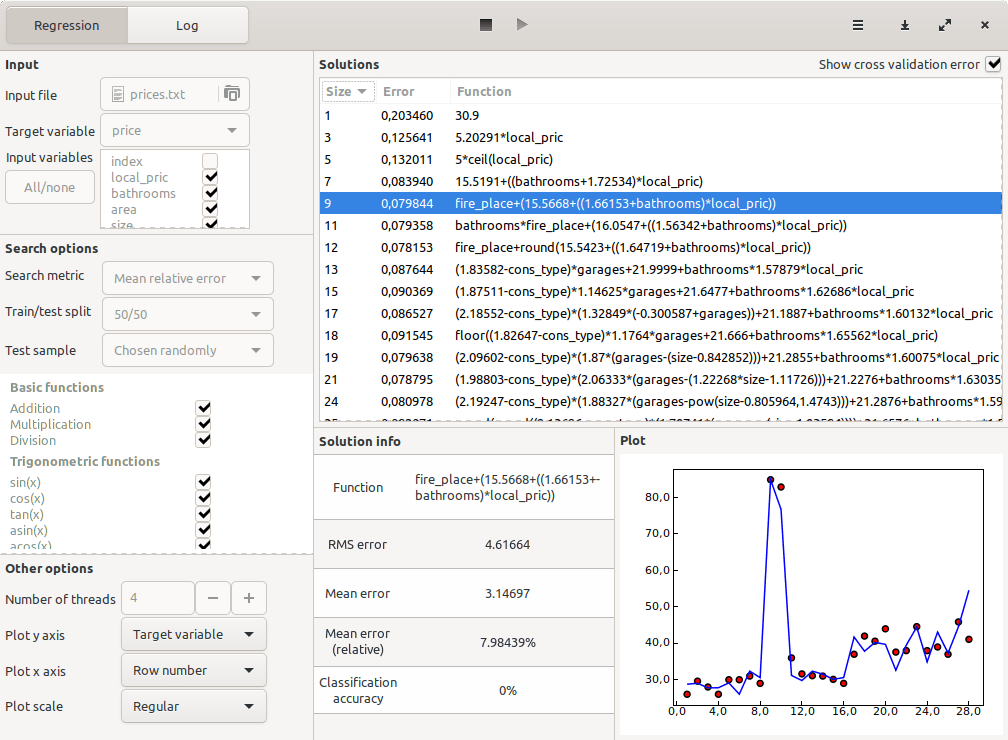

After running the optimization for a few minutes, the formulas found by the program and their corresponding out-of-sample errors were the following:

The highlighted one turned out to be the best: more complex solutions did not offer increased out-of-sample accuracy. Its mean relative error on the test dataset was roughly 8%. Here is that formula:

price = fire_place + 15.5668 + (1.66153 + bathrooms) * local_pric

Only three variables appear in this formula: the number of bathrooms, the number of fireplaces, and the local price. It is far from obvious that these three parameters alone should suffice to predict the house price this well, but the symbolic regression optimization made this fact evident.
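The formula found above is easy to use outside of TuringBot. Below it is written as a Python function, with the variable names kept exactly as they appear in the formula, together with a helper for the mean relative error. We assume the usual definition, the average of |predicted − actual| / |actual| over the rows; whether TuringBot computes it in exactly this way is an assumption, and the helper names are hypothetical.

```python
def predict_price(fire_place, bathrooms, local_pric):
    """The formula found by the symbolic regression search, as a Python function."""
    return fire_place + 15.5668 + (1.66153 + bathrooms) * local_pric


def mean_relative_error(predicted, actual):
    """Average of |predicted - actual| / |actual| over all rows (actual values must be non-zero)."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - a) / abs(a) for p, a in zip(predicted, actual)) / len(actual)
```

For example, `mean_relative_error([110, 90], [100, 100])` gives 0.1, i.e. a 10% error; the roughly 8% figure quoted above corresponds to a value of about 0.08 on the test rows.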

Conclusion

In this tutorial, we have seen an example of generating a regression model. The technique that we used was symbolic regression, implemented in the desktop software TuringBot. The model that was found had good out-of-sample accuracy in predicting house prices from their characteristics, and it revealed the most relevant variables for estimating that price.